Are Your Email Donors Different from Your Mail Donors?

The polls got a relative drubbing in 2016, but their long-term win rate is off the charts. One 2016 theory still alive and well going into 2022 was that polls suffered from response bias: the people willing to be polled had different candidate preferences from those showing up to vote.

More specifically, Republican voters were less willing to take a poll because they trust the media less, and therefore 2016 polling under-represented the Trump vote.

When we conduct donor surveys, we typically include only those with an email address, as the survey is online and we email a private, individualized URL. People wonder if this creates response bias.

The question isn’t whether people who participate are different from those who don’t; it’s whether they are different in ways that matter to the outcomes you care about – e.g., candidate preference or motivations to donate.

The New York Times political polling operation ran a novel experiment to explore the underrepresented Republican voter theory. They ran their normal phone poll alongside a mailed version of the survey (which could be mailed back or completed online) with a $25 incentive to participate.

We’ve done similar parallel survey work (minus the financial incentive), sending a postcard with a printed survey URL to a sample of donors with no email address.

What did the Times find? Incentives work. The mailed survey with the $25 incentive got a massive 35% response rate, compared to a paltry but typical 1.6% for the phone poll.

Surely the response rate difference alone makes the mail survey more accurate, right? Not necessarily. Sampling is like a huge vat of soup on the stovetop. I don’t need to drink the whole pot to know how it tastes as long as it’s stirred well. My sample taste, a few drops of the total vat, is all I need. A larger sample doesn’t automatically make it better.
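For the spreadsheet-inclined, here is a rough sketch of the soup point in Python. Everything in it is invented for illustration: the `taste_test` name, the 200,000-person population, the 50/50 split, and the 30% versus 20% response rates are my assumptions, not numbers from the Times or from our donor surveys. It compares a small, well-stirred sample against a much bigger one where one side is simply more willing to respond.

```python
import random


def taste_test(seed=0, population_size=200_000, true_share=0.50):
    """Compare a small random sample with a large, poorly stirred one."""
    rng = random.Random(seed)

    # Hypothetical population: 1 = prefers candidate A, 0 = prefers candidate B.
    population = [1 if rng.random() < true_share else 0
                  for _ in range(population_size)]
    actual = sum(population) / population_size

    # Well-stirred soup: every person is equally likely to be picked.
    small_random = rng.sample(population, 1_000)

    # Poorly stirred soup: A supporters respond 30% of the time,
    # B supporters only 20% of the time (assumed rates).
    large_biased = [
        person for person in population
        if rng.random() < (0.30 if person == 1 else 0.20)
    ]

    return {
        "actual": actual,
        "small_n": len(small_random),
        "small_estimate": sum(small_random) / len(small_random),
        "large_n": len(large_biased),
        "large_estimate": sum(large_biased) / len(large_biased),
    }


result = taste_test()
for name, value in result.items():
    print(f"{name}: {value:.3f}" if isinstance(value, float) else f"{name}: {value}")
```

Under these made-up rates, the big sample has tens of thousands of responses and still lands further from the truth than the 1,000-person random draw. Size didn't save it, because who responded was tied to the very thing being measured.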

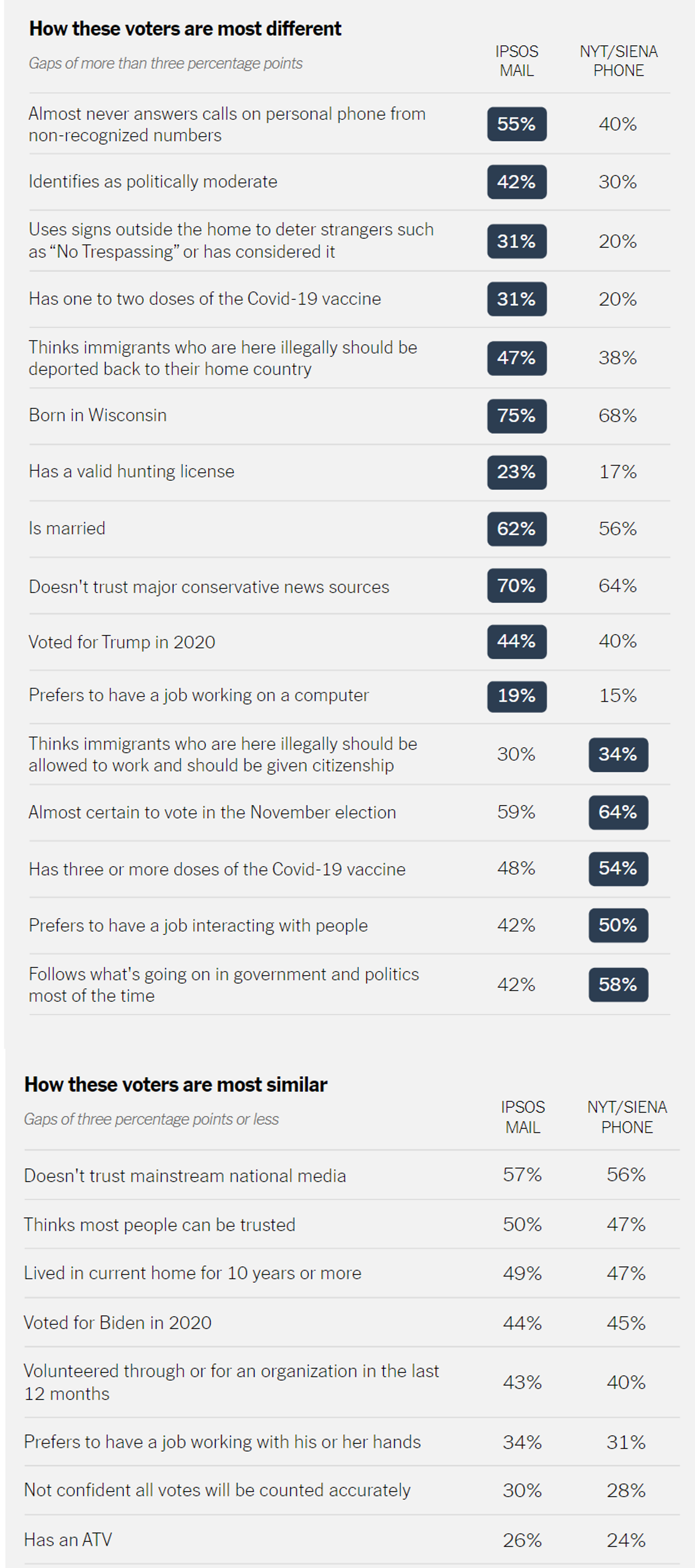

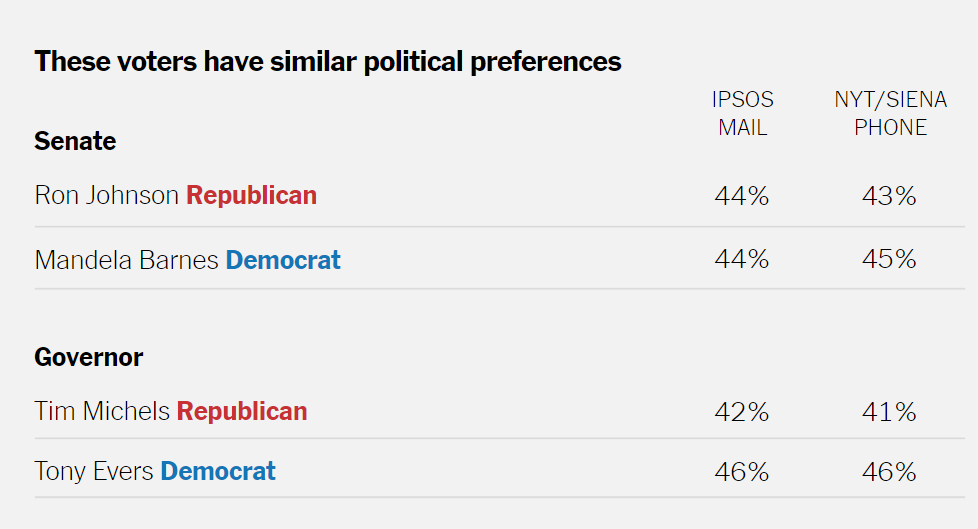

What did they find? Radically different profiles, right? The won’t-answer-the-phone crowd is anti-social, less likely to be fully dosed, more nativist, anti-immigrant, not “educated” on politics…

Oh but wait, they equally distrust the media and voted for Biden in 2020. This profile data suffers from several problems:

- It’s descriptive, not predictive.

- The longer the list of differences, the more we think these differences matter. After all, we wrote the survey and we wouldn’t have asked about things that don’t matter.

- Any time you look at two groups of people and compare results in this way, you’ll see differences, but much of what you see is noise, not signal.

- These profiles get our brains painting mental pictures and mini stories that we’re automatically inclined to think are real and matter because we concocted them.
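The noise point in the list above is easy to see with a quick simulation. This is only a sketch with assumed numbers: the `profile_gaps` name, the 200 people per group, the 20 yes/no questions, and the 50% yes rate are all hypothetical. Both groups are drawn from the exact same population, so any gap between them is pure chance.

```python
import random


def profile_gaps(seed=1, group_size=200, n_questions=20, yes_rate=0.5):
    """Return the gap in 'yes' share between two identical groups, per question."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_questions):
        # Both groups answer "yes" with the same underlying probability.
        share_a = sum(rng.random() < yes_rate for _ in range(group_size)) / group_size
        share_b = sum(rng.random() < yes_rate for _ in range(group_size)) / group_size
        gaps.append(abs(share_a - share_b))
    return gaps


gaps = profile_gaps()
notable = [gap for gap in gaps if gap >= 0.05]
print(f"Questions with a gap of 5+ points: {len(notable)} of {len(gaps)}")
print(f"Largest gap: {max(gaps):.1%}")
```

With groups this size, gaps of several points are expected to show up on a fair share of questions even though there is nothing real behind them. That is the raw material for the picture painting.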

Do the differences matter to what NYT polling cares about? Not a lick. And the polling was very accurate when compared to Election Day reality.

Our parallel surveys with donors tell the same story. If I profile them on demographics, we’ll see some differences. And those meaningless differences lead to picture painting, which is why we skip all of that reporting.

Your email donors likely aren’t different from your mail donors on the things that matter to why they support you. And that applies to damn near every superficial way we may group people.

Kevin