Testing Goes To Pot

Last year, the journal JAMA Internal Medicine published a blockbuster finding: April 20th has a 12% elevated fatal crash risk, which is statistically significant. This doesn’t sound like a blockbuster until you factor in that April 20 is also a very unofficial holiday for using marijuana. The authors claimed that this likely indicates marijuana use doubles your fatal crash risk.

A statistically significant result. Case closed, right? That’s often what we do with our direct marketing tests: we get a result, it differs from the control, we call it, and we move on to the next test.

But it’s important to think about what statistical significance means. Steve Rudman of Concord Direct illustrated this well on a recent webinar. He had a clear winner in his three-panel test. The only problem: the printer had accidentally sent the same piece to all three groups. There was a statistically significant difference in results, but no difference in the mail pieces. I’ve written about the same thing here: a 15-panel test in which every panel got the same package, yet the results showed wide variations in average gift and response rate.

So, statistical significance at the .05 level means that if there were no real difference, there’s only a 5% chance that results at least this extreme would have happened by random chance.
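To see Steve’s accidental experiment in miniature, here’s a quick Python simulation of an A/A test: both panels get the same piece, so any “winner” is pure noise. The panel size, response rate, and number of repeated tests are made-up assumptions, and the significance check is a standard pooled two-proportion z-test, not necessarily what any particular shop uses.

```python
import math
import random


def two_proportion_p_value(hits_a, n_a, hits_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no responses (or all responses) in both panels: no evidence either way
    z = (hits_a / n_a - hits_b / n_b) / se
    # erfc(|z| / sqrt(2)) is the two-sided tail area of a standard normal
    return math.erfc(abs(z) / math.sqrt(2))


def aa_false_positive_rate(n_tests=1000, n_per_panel=2000, response_rate=0.05,
                           alpha=0.05, seed=42):
    """Mail the *same* piece to two panels, many times over (hypothetical numbers),
    and count how often the z-test declares a 'winner' anyway."""
    rng = random.Random(seed)
    significant = 0
    for _ in range(n_tests):
        # Both panels share one true response rate, so any difference is noise.
        hits_a = sum(rng.random() < response_rate for _ in range(n_per_panel))
        hits_b = sum(rng.random() < response_rate for _ in range(n_per_panel))
        if two_proportion_p_value(hits_a, n_per_panel, hits_b, n_per_panel) < alpha:
            significant += 1
    return significant / n_tests


print(f"Share of identical-package tests called significant: {aa_false_positive_rate():.3f}")
```

Run it and roughly 1 in 20 of these identical-package tests gets “called” for one panel or the other, which is what a .05 threshold implies.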

Going back to our JAMA/April 20 study, there are 365 days in the year. That means if crash risk were completely random, we’d expect about 18 days (365 × .05) to show a statistically significant difference in crash risk. That’s kinda what random means.
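The arithmetic here is simple enough to check in a few lines. This sketch assumes 365 independent daily tests and no real effect on any day; under those assumptions each day’s p-value is uniformly distributed, so each day has a 5% chance of being flagged. Real crash data won’t be this tidy.

```python
import random

DAYS = 365
ALPHA = 0.05

# Expected number of days flagged as significant when nothing is going on
expected_flukes = DAYS * ALPHA               # 18.25
# Chance that at least one of the 365 days gets flagged
p_at_least_one = 1 - (1 - ALPHA) ** DAYS     # effectively 1


def average_flagged_days(n_years=1000, seed=7):
    """Simulate many years of null daily tests. With no real effect, a p-value is
    uniform on [0, 1], so each day is flagged with probability ALPHA."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_years):
        total += sum(rng.random() < ALPHA for _ in range(DAYS))
    return total / n_years


print(f"Expected flagged days per year: {expected_flukes:.2f}")
print(f"Chance at least one day is flagged: {p_at_least_one:.6f}")
print(f"Simulated average flagged days: {average_flagged_days():.2f}")
```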

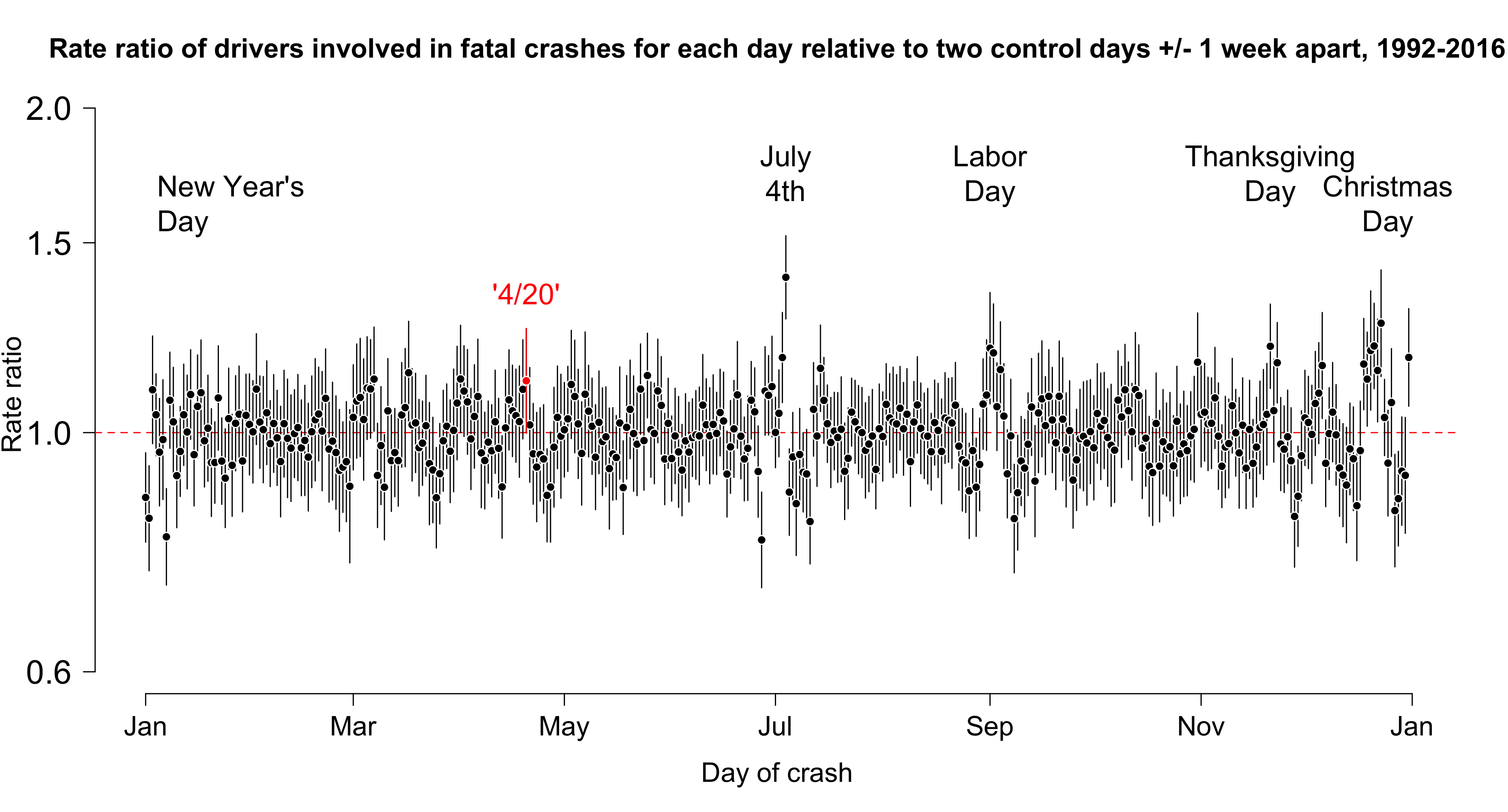

Well, here’s what happens if you look at every day using the methodology from the original JAMA article (thanks to this article for the analysis):

It turns out 20 days had a higher relative crash rate than April 20th. The list is part days when people have time off and no family obligations (aka drinking holidays), like the Fourth of July, the Third of July, Halloween, New Year’s Eve, and the days on which Memorial Day and Labor Day often fall, and part days where no explanation jumps to mind. I don’t think we’re going to see a “December 6th is one of the deadliest days on the roads” PSA any time soon, but it has statistical significance over time just like 4/20.

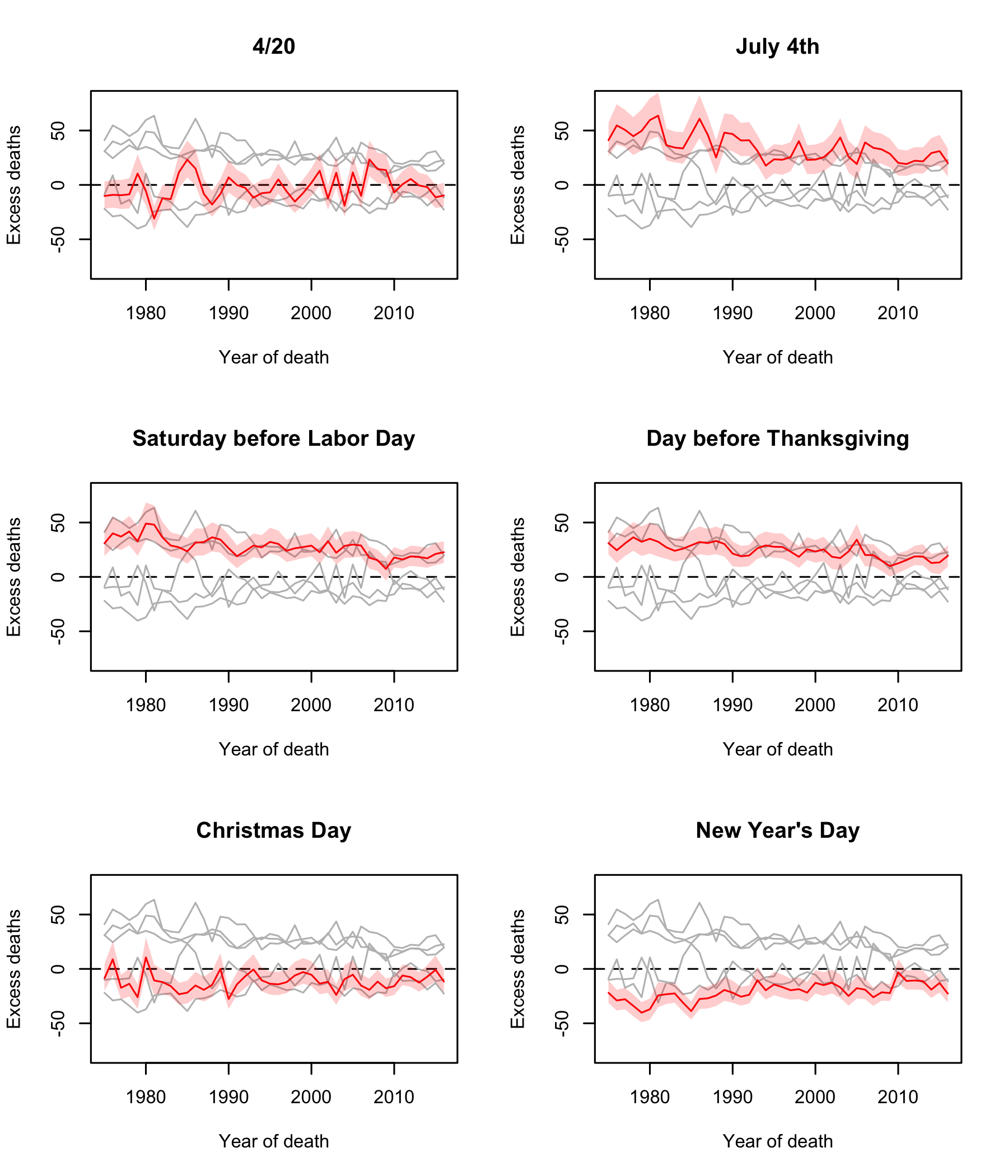

So let’s think about this. 4/20 as a “holiday” became more widespread in about 2006. Did we see fatal car crashes jump up around then? No. But we do see other holidays with more persistent effects over time:

So what’s the answer? I don’t know for certain. And that is, and should be, our answer for many things in our testing.

Because random chance happens. Even if three independent tests each came up statistically significant at the .05 level, there’s still a .0125% likelihood (.05 × .05 × .05 = .000125) that all three happened randomly.
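Here’s where that .0125% comes from (.05 × .05 × .05), alongside its flip side: run three tests of packages that truly don’t differ, and the chance that at least one of them comes up significant anyway is far from tiny. Both numbers assume the three tests are independent and that there’s no real effect in any of them.

```python
ALPHA = 0.05

# Three independent tests, no real effect anywhere:
all_three_flukes = ALPHA ** 3              # 0.000125, i.e. 0.0125%
at_least_one_fluke = 1 - (1 - ALPHA) ** 3  # 0.142625, i.e. about 14.3%

print(f"All three significant by chance: {all_three_flukes:.4%}")
print(f"At least one significant by chance: {at_least_one_fluke:.1%}")
```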

By the same token, if you come up with a result that isn’t statistically significant, it still contributes to our knowledge. .05 isn’t a magic number where .049 is touched by the hand of whatever and anointed big-T Truth while .051 is dreck.
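To make that concrete, here’s a sketch with two hypothetical tests whose z-scores differ by only 0.02. It uses the standard normal approximation for a two-sided p-value; the z-scores are invented for illustration.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a z-statistic under a standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


# Two hypothetical tests whose z-scores differ by only 0.02
p_just_under = two_sided_p(1.97)  # about 0.0488: "significant"
p_just_over = two_sided_p(1.95)   # about 0.0512: "not significant"

print(f"z = 1.97 -> p = {p_just_under:.4f}")
print(f"z = 1.95 -> p = {p_just_over:.4f}")
```

One lands just under .05 and one just over, though the evidence in the two is nearly identical.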

Rather, we can all be Bayesians. Not in the sense of being an English clergyman whose greatest contribution to knowledge was published after his death, but in the spirit of Bayes’ Theorem, which is about how certain we should be. To de-math the theorem, it says:

Old belief + new evidence * the strength of that evidence = new belief.
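For anyone who wants a little math back, one standard way to state Bayes’ rule is in odds: new odds = old odds × likelihood ratio, where the likelihood ratio is how much more probable the evidence is if your belief is true than if it’s false. Take logs and the multiplication becomes addition, which is close in spirit to the formula above. The priors and likelihood ratios below are hypothetical numbers, not results from any real test.

```python
import math


def update_belief(prior_prob, likelihood_ratio):
    """Bayes' rule in log-odds form:
    new log-odds = old log-odds + log(likelihood ratio)."""
    if not 0 < prior_prob < 1:
        raise ValueError("a belief held with total certainty can't be updated")
    if likelihood_ratio <= 0:
        raise ValueError("likelihood ratio must be positive")
    prior_log_odds = math.log(prior_prob / (1 - prior_prob))
    posterior_log_odds = prior_log_odds + math.log(likelihood_ratio)
    return 1 / (1 + math.exp(-posterior_log_odds))  # back from log-odds to probability


# Hypothetical: we start 50/50 that a new package beats control...
belief = update_belief(0.5, 3.0)     # ...a result 3x likelier if it's better -> 0.75
belief = update_belief(belief, 0.8)  # ...then a mixed result nudges it down -> about 0.706
print(f"Current belief the new package is better: {belief:.3f}")
```

A likelihood ratio of 1 leaves the belief where it was, and the function rejects probabilities of exactly 0 or 1, since no amount of evidence can move a belief held with total certainty.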

And all these beliefs are somewhere between certainty yes and certainty no. Every belief is a shade of grey.

We have beliefs. We run the tests not to prove or disprove our beliefs, but to add or subtract support from them.
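One way to put a number on “a shade of grey” is to ask how likely it is that one package truly beats another, rather than whether it cleared .05. This sketch uses a Beta-Binomial model with flat Beta(1, 1) priors on each panel’s response rate (an assumption; an informed prior would carry your old belief in) and estimates P(B beats A) by Monte Carlo. The response counts are hypothetical.

```python
import random


def prob_b_beats_a(resp_a, n_a, resp_b, n_b, draws=50_000, seed=1):
    """Estimate P(true rate of B > true rate of A) under a Beta-Binomial model.

    Each panel's response rate gets a flat Beta(1, 1) prior, so its posterior is
    Beta(1 + responses, 1 + non-responses). We sample both posteriors and count
    how often B comes out ahead."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + resp_a, 1 + n_a - resp_a)
        rate_b = rng.betavariate(1 + resp_b, 1 + n_b - resp_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical panels: 5,000 pieces each, 100 vs. 115 responses
print(f"P(B beats A) = {prob_b_beats_a(100, 5000, 115, 5000):.2f}")
```

With these made-up counts, a standard two-proportion significance test wouldn’t call a winner, yet the estimate lands somewhere around 0.85: evidence that adds support to B without proving anything.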

It’s also why we try to keep our testing either inexpensive, as with Facebook ads (some interesting tests from Kiki Koutmeridou at last week’s Behavioral Science Symposium here), or broad, as with the DonorVoice Pre-Test Tool demonstrated here.

And if you’d like to see this type of Bayesian thinking in action, I recommend Erica Best’s presentation from the Symposium below. Their research data suggested that their initial approach wasn’t optimal. They tested that finding in the Pre-Test Tool, then in five live mail tests and one online ad test. Five tests supported it and one had mixed results; they think they know why, and they’re going to test that hypothesis:

It’s a great example of a cycle of testing, learning, and refining that we can all model.

Nick

P.S. An aside from this former traffic safety guy: pot-impaired driving is just that, impaired. You’ve probably read enough drug labels in your life that your prior knowledge is that putting mind-altering chemicals into your body and then piloting any form of craft don’t mix. As for the literature, there’s debate over the level of impairment and the size of the increase in crash risk, but there’s not much uncertainty as to whether it is impairing. So please don’t drive high.

Consider, too, that many of those special holidays may have elevated amounts of driving (more people traveling to the in-laws for dinner, heading to the airport, etc.). This may be offset by a reduced amount of “standard” driving, too.

Unless you have a standard baseline (like the number of miles driven per day), just having the variation per day, even if it gives a statistically significant result, doesn’t say that much.

Reminds me of the XKCD comic about jelly beans and the dangers of the “only statistically significant results are publishable” culture which has developed in the scientific community.

https://www.xkcd.com/882/

Thanks for the reminder that there are many ways to view your results, and not all of them mean the same thing.

Exactly. When you look at fatalities per vehicle mile traveled (VMT), the deadliest days are usually ones like the Fourth of July, when people tend to travel (increasing the VMT) and drink (increasing the risk).