“Zero Party” Data is the Best Party Data

To recap our previous post: zero-party data draws a distinction within first-party data (i.e., data you hold from direct interaction with your supporters) between data that is voluntarily and willingly shared and data that is passively and (often) unknowingly collected. The latter requires inference and assumption; the former reflects knowing and understanding.

We recommend starting with three types of zero-party data. Each has a discrete purpose, its own way of being measured and its own application.

- Donor’s Connection to your mission (this provides a way to unearth motivation)

Do Ask: Identity questions. We can’t list all the detailed measures here, but measures tend to have a lot in common within a sub-sector (e.g., health, animal welfare, environment, social, international relief).

In health the connection is probably obvious; in the others, less so. And the more abstract the connection, the more likely it is that you need an indirect set of items that together measure an underlying construct (i.e., a survey scale).

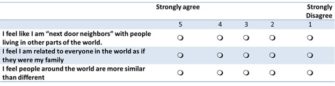

The scale above is one example from the International Relief sector; it allows us to classify people as High or Low Globalists. High Globalists then receive messaging whose themes are drawn from the scale itself.

We’ve run tests showing that this messaging produces more lift in conversion than the typical “need/solution/why you” practice, which requires (or should we say, “suffers from”?) knowing nothing about the individual. The only reason High Globalists care about your need and solution is that they have decided (subconsciously) that supporting you will reinforce their sense of self and the values that come with being a High Globalist.

By priming this donor’s identity with the right messaging, we activate it and, in doing so, “show them we know them.” We also make the case for their “why,” not your organizational “why,” more explicitly and in a way that is easier for the donor to take in.

Don’t Ask: “Why did you give to us?” The question is direct and to the point, and if you ask it, people will answer. The problem is that people are largely incapable of the introspection needed to tell you their real “why.”

If you asked someone who had just given to international relief why they gave, they might offer comments that hint at what the scale above measures. But that is a far cry from a reliable, valid scale you can use.

And if you make the question closed-ended and provide response options, are you going to make one of them “Because I’m a globalist” or “Because I feel people are more similar than different”?

There are two problems with this type of question. First, you need all three items to measure the construct, and how you word them matters. Second, framing the question as “why they gave” undermines getting a reliable read: people, in essence, overthink the question or choose an answer that seems less abstract as they mentally wrestle with retrieving relevant information from their rational selves and mapping internal judgments onto the response options.

- Donor’s Connection to your brand

Do: Commitment Measure.

- I am a committed [CHARITY] donor

- I feel a sense of loyalty to [CHARITY]

- [CHARITY] is my favorite charitable organization

This is the short version of a DonorVoice proprietary scale for measuring the strength of a supporter’s relationship with your organization. The scale (and the fuller model behind it) came out of a multi-year product development effort that began in the commercial sector and followed standard, rigorous approaches to measurement, scale development and validation.

[This research and its application are described in the chapters on Commitment in Roger’s book Retention Fundraising. You’ll also find a fuller description of the process here on the DonorVoice website.]

Bottom line: it works because we started with a theory, a point of view on how the world works, and built from there. This approach has predictive, forward-looking value and a myriad of applications, not least of which is adjusting the frequency of communications based on Commitment scores.
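The post doesn’t say how the three Commitment items are combined into a score, and the full DonorVoice method is proprietary. Purely to illustrate the idea of tying a contact rule to a multi-item score, here is a minimal Python sketch. It assumes a 1-7 agreement scale, scores each donor as the simple average of the three items, and sorts donors into bands using made-up cutoffs. The function names, the scale range and the cutoffs are all hypothetical; none of this is DonorVoice’s actual scoring.

```python
from collections.abc import Mapping

# Short-form Commitment items as worded in the post; [CHARITY] is a placeholder.
COMMITMENT_ITEMS = (
    "I am a committed [CHARITY] donor",
    "I feel a sense of loyalty to [CHARITY]",
    "[CHARITY] is my favorite charitable organization",
)

# ASSUMPTION: 1 = strongly disagree ... 7 = strongly agree.
SCALE_MIN, SCALE_MAX = 1, 7

# HYPOTHETICAL band cutoffs, for illustration only; not DonorVoice's.
HIGH_CUTOFF = 6.0
MID_CUTOFF = 4.0


def commitment_score(responses: Mapping[str, int]) -> float:
    """Average a donor's ratings across all three items (assumed scoring rule).

    A partial answer set is rejected because the items only measure the
    construct together.
    """
    ratings = []
    for item in COMMITMENT_ITEMS:
        if item not in responses:
            raise ValueError(f"missing response for: {item!r}")
        rating = responses[item]
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating {rating} outside {SCALE_MIN}-{SCALE_MAX}")
        ratings.append(rating)
    return sum(ratings) / len(ratings)


def commitment_band(score: float) -> str:
    """Map a score to a hypothetical band that a contact-frequency rule could key on."""
    if score >= HIGH_CUTOFF:
        return "high"
    if score >= MID_CUTOFF:
        return "mid"
    return "low"


# Example: one donor's answers.
donor = dict(zip(COMMITMENT_ITEMS, (7, 6, 6)))
score = commitment_score(donor)  # (7 + 6 + 6) / 3, about 6.33
print(round(score, 2), commitment_band(score))  # prints: 6.33 high
```

The post doesn’t say which communication frequency each band should get, so the sketch stops at the band label; mapping bands to a cadence would be your own decision.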

Loyalty, or Commitment, is a mindset, not a behavior. Behavior is an outcome. If your “loyalty” measure includes outcome data, it is circular: it lacks any theoretical basis and has no practical value beyond surfacing “good” donors based on past behavior, as RFM (recency, frequency, monetary value) metrics already do. Hardly new or insightful.

Don’ts:

- Don’t use Net Promoter Score. Kevin and Adrian Sargeant published a piece on why this measure sucks.

- Don’t rely on a random compilation of attitudinal measures. We started our work to define and measure this thing called Relationship with well over 200 items to measure various parts of the relationship, including over 40 just to measure trust! Randomly picking amongst those, while tempting, is not the path to prosperity.

- Don’t use a random compilation of attitude + behavior measures (the kitchen-sink approach). You can find an endless number of measures, or combinations of measures, that correlate with behavior, especially if you use past behavior data (input) to predict future behavior (output). That is different from saying they cause it. And being able to say they cause it (which we can with Commitment) means you can also use the measure for root-cause analysis.

- Donor’s experience with your brand (one of the places you should collect zero-party data is right after an interaction: giving, joining, attending, complaining).

If donor experience matters and you aspire to be “donor-centric,” then we’d put a stake in the ground: doing so requires collecting post-interaction feedback as an “always-on” business process. Measuring and managing donor experience is a way to raise money without ever asking for it.

As an illustration, we asked for feedback about the online giving experience and then fixed the user-identified problems, which would never have surfaced without this type of data. As a result, page conversion increased from 12% to 33%. No more asking, just a better, easier process that only comes from collecting user experience data.
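To put the 12% to 33% change in perspective: in absolute terms it is a gain of 21 percentage points, and as relative lift over the original conversion rate it works out as follows.

```latex
\text{relative lift}
  = \frac{33\% - 12\%}{12\%}
  = \frac{21}{12}
  = 1.75
  = 175\%
```

In other words, the improved page converted at 2.75 times its original rate (33 / 12 = 2.75).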

The donor experience measure necessarily changes with the type of interaction (e.g., after an online donation, after attending an event, after being canvassed, after receiving a telemarketing (TM) call), because it is diagnostic and specific to that interaction.

The main takeaways:

- Take a continuous census, not a one-time sample. This is not a one-and-done or project-based exercise.

- The experience feedback has value at the individual supporter level and in aggregate to diagnose systemic issues.

- It needs to be asked shortly after the interaction to maximize data quality and participation rates.

- The feedback process also generates passive first-party data with predictive value: bounce-backs, unsubscribes, opens and clicks.

Don’t. Don’t not do it. Apologies for the double negative, but these days it’s doubly important to take action and not rely on the same-old-same-old approach to data.

Mindset and culture don’t really eat strategy for breakfast. Instead, they dictate what you have for breakfast and how breakfast is prepared (tactics). We need to get into the mindset of getting zero-party data from donors. It’s accurate. It’s proprietary to you. It’s specific to that donor’s needs and desires. It’s a sustainable competitive advantage.

Roger and Kevin

P.S. Over the years we’ve heard most of the objections to, and seen the resistance to, undertaking a serious first-party/zero-party data effort. In the next post we’ll outline those objections, give you the counterpoints and show you, with case examples, why all this is so well worth the effort.