Are We Improving on Silence? Social Advertising Edition

We talked Monday about how difficult it is to test something versus nothing, how few organizations do it online, and how eBay found, when they ran a pure test, that their search engine ads weren’t nearly as effective as they’d thought (in fact, they were losing money) because many of those people would have come to their site anyway.

It won’t surprise you that the same effect shows up in Facebook advertising, although likely not to the same extent.

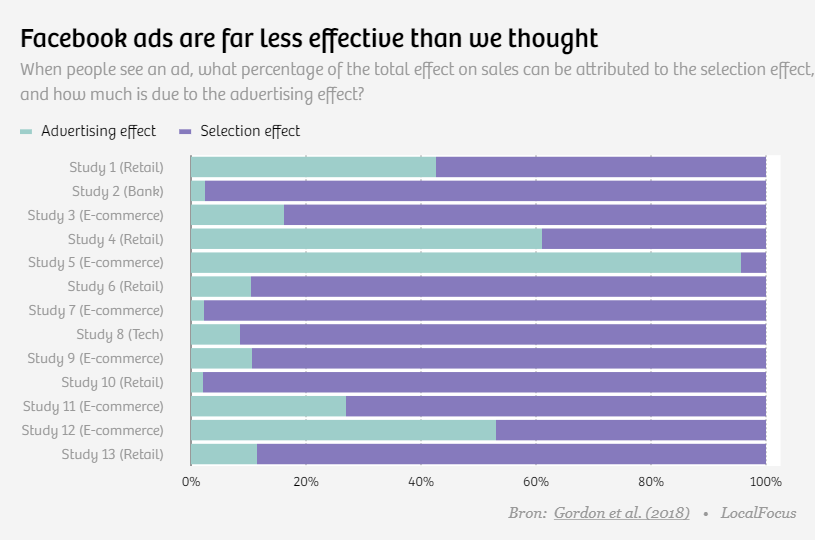

Researchers looked at 15 tests that large organizations had run on Facebook without accounting for how people would substitute organic purchases for ad-driven ones. The full study is here.

The study was gleefully reported on in a piece that wears its thesis in its title: The New Dot-Com Bubble is Here: It’s Called Online Advertising. It’s worth a read; it covers both the Google results we discussed on Monday and the Facebook results noted in this post. Here’s how it framed the results:

“In seven of the 15 Facebook experiments, advertising effects without selection effects were so small as to be statistically indistinguishable from zero.”

Also from the article:

I would frame this a different way: eight of 15 experiments found that Facebook ads significantly increased purchases at the .05 level (and another two were significant at the .10 level). In other words, although the effect size wasn’t as large as Facebook would likely have you believe (the uncorrected estimates were sometimes 50x the actual effect size once the selection bias was taken out), there were still some very strong results. Four of five studies also showed that Facebook advertising produced statistically significant increases in registrations, and three of three showed significant increases in page views.
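To make “significant at the .05 level” concrete, here is a minimal sketch of a standard two-proportion z-test comparing purchase (or gift) rates between a group that saw ads and a group that didn’t. This is the textbook version of the idea, not the researchers’ own method, and the counts below are invented for illustration rather than taken from the study.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that the ads make no difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical: 100,000 people saw ads, 100,000 didn't
z, p = two_proportion_z_test(1150, 100_000, 1000, 100_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at .05: {p < 0.05}")
```

With these made-up numbers, a lift from 1.00% to 1.15% is comfortably significant, while a lift to only 1.02% on the same group sizes would not be. Significance also says nothing about whether the lift paid for the ads.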

These researchers didn’t have access to spend and revenue figures, so it’s tough to determine whether “statistically significant” also means “positive ROI.” But considering that eBay’s search engine ads showed no significant effect once corrected for selection, that’s an improvement.
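Why significance and ROI are different questions: a rough sketch, assuming you do have spend and revenue figures for an ad group and a no-ad control group. It uses one common definition of ROI, (incremental revenue − spend) / spend, and every number is hypothetical.

```python
def incremental_roi(test_revenue: float, test_size: int,
                    control_revenue: float, control_size: int,
                    ad_spend: float) -> float:
    """ROI on ad spend, counting only revenue above what the control group predicts."""
    # Revenue per person in the no-ad group estimates what the ad group would have given anyway
    baseline = control_revenue / control_size * test_size
    incremental_revenue = test_revenue - baseline
    return (incremental_revenue - ad_spend) / ad_spend


# Hypothetical campaign: 50,000 people in each group, $10,000 in ad spend
naive = (60_000 - 10_000) / 10_000  # credits every gift from the ad group to the ads
true_roi = incremental_roi(60_000, 50_000, 52_000, 50_000, 10_000)
print(f"naive ROI: {naive:.0%}, incremental ROI: {true_roi:.0%}")
```

Here the naive figure comes out at 500%, while the incremental ROI is -20%: the ads raised $8,000 that wouldn’t otherwise have come in, but cost $10,000 to run.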

Let’s say you wanted to run a proper test on the effectiveness of Facebook ads for you. You’d go to Facebook Ads Center, set up an A/B test, and specify that the B part of the test was nothing – no ads. You’d then compare donation behavior between the two groups and get a solid test of your ROI.

I am, of course, lying. Ads Center isn’t set up to do that. Again, very easy to test one ad versus another ad; very difficult to test one ad versus no ad. There’s no money for Facebook in showing you the most accurate ROIs for your ads; in fact, an accurate test would mean less money for them.

So how would you set up your own test? My thinking is that this is most easily done with a co-targeting test using a Facebook Custom Audience:

Step 1: Gather the people you want to advertise to (lapsed donors, petition signers, people receiving a certain mail piece, and so on).

Step 2: Randomize half of those donors to your ad group and half to receive no ads. (If you want to do this quick and dirty, put all of them in Excel, have it generate a random number between 0 and 1, and advertise to those whose number is .5 or greater.)

Step 3: Upload only the ad group to Facebook. Don’t use any other audience (especially lookalikes, which could easily be people in your control group).

Step 4: At the end of your advertising sprint, look at donations across all channels from the original list you pulled. See what impact the ads had on donations overall and whether that was worth the spend.
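Steps 2 through 4 can be sketched in a few lines of Python as an alternative to the Excel approach. This is a minimal illustration under stated assumptions: people are identified by email, `split_audience`, `upload_csv`, and `compare_groups` are hypothetical helper names, and the one-column “email” CSV is an assumed layout, so check Facebook’s current Custom Audience file requirements before uploading anything.

```python
import csv
import io
import random


def split_audience(people: list[str], seed: int = 42) -> tuple[list[str], list[str]]:
    """Step 2: randomly assign half the list to the ad group and half to a no-ad control."""
    rng = random.Random(seed)  # fixed seed, so the same split can be rebuilt later
    shuffled = list(people)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd-sized list, the extra person lands in the control group
    return shuffled[:half], shuffled[half:]


def upload_csv(ad_group: list[str]) -> str:
    """Step 3: build a CSV of the ad group ONLY (assumed layout: one 'email' column)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["email"])
    for email in ad_group:
        writer.writerow([email])
    return buf.getvalue()


def compare_groups(gifts: dict[str, float], ad_group: list[str],
                   control: list[str]) -> dict[str, float]:
    """Step 4: compare giving from all channels during the sprint.

    `gifts` maps a person to their total donations; anyone missing gave nothing.
    """
    def per_person(group: list[str]) -> tuple[float, float]:
        donors = sum(1 for p in group if gifts.get(p, 0.0) > 0)
        revenue = sum(gifts.get(p, 0.0) for p in group)
        return donors / len(group), revenue / len(group)

    ad_rate, ad_rev = per_person(ad_group)
    ctl_rate, ctl_rev = per_person(control)
    return {
        "ad_response_rate": ad_rate,
        "control_response_rate": ctl_rate,
        # Extra revenue per ad-group member, scaled up to the whole ad group
        "incremental_revenue": (ad_rev - ctl_rev) * len(ad_group),
    }


if __name__ == "__main__":
    people = [f"donor{i}@example.org" for i in range(10)]
    ads, holdout = split_audience(people)
    print(upload_csv(ads))
    gifts = {ads[0]: 50.0, ads[1]: 25.0, holdout[0]: 25.0}
    print(compare_groups(gifts, ads, holdout))
```

Shuffling and cutting the list in half gives exactly equal groups, whereas the Excel coin-flip gives roughly equal ones; either works. The fixed seed matters because you’ll need to reconstruct the control group weeks later, at Step 4.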

Not too hard to do, but you must create your own tools to do it.

We’ve focused on online sources of revenue so far – on Friday, we’ll look at other media and how to test something against nothing.

Nick