From Talk to Action: The ROI of Measuring Donor Experience

You can talk about donor experience, but unless you’re regularly measuring it, that’s all it will ever be: talk.

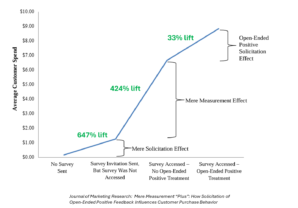

But what’s the return on investment? Is it a just-believe thing? Good and no, respectively. These are experimental results comparing asking for feedback after a customer (donor) interaction with not asking. The “not asking” is likely your organization’s standard operating procedure.

The experiment had several groups:

- Control. Did not receive a request for feedback after the customer interaction.

- Received a request for feedback. This group breaks into three subgroups, either naturally occurring or experimentally altered:

  - Mere Solicitation Effect Group: these people received the request but didn’t give feedback.

  - Mere Measurement Effect Group: these people received the request and replied to it.

  - Open-End Question Asking for Positives Group: this was an experimental survey condition. For people in this group, the first survey question was an open-ended prompt to share any positives about the customer interaction. This group is compared with respondents whose survey did not include that opening positive prompt.

The spend variable (Y axis) measures spend in the 30-day period after the feedback request (or the lack of one for the control group). These researchers ran a similar experiment looking at spend over a full year after the single request for feedback and found a statistically significant lift.

I’ve added in the percentage change, so even if your mileage varies, there’s a hell of a lot of room between these gaudy results and your typical A/B test lift. Why does asking for feedback get people to spend/give more?

- The act of asking for feedback suggests you care (mere solicitation effect).

- Giving feedback causes people to subconsciously self-evaluate and perceive themselves as more committed to or interested in the cause (mere measurement effect).

- Soliciting open-ended positive feedback can create positively biased memories of an experience (positive solicitation effect).

Even customers who rated the experience as “poor” were positively influenced by the open-ended positive prompt: they spent 55% more than those who also rated the experience as poor but didn’t get the open-end prompt.
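If you want to run the same comparison on your own file, the percentage change here is just relative lift: the gap between two groups' average spend, divided by the baseline group's average. Below is a minimal Python sketch of that arithmetic. The function name and the dollar amounts are hypothetical placeholders chosen only to reproduce a 55% figure; they are not numbers from the study.

```python
def percent_lift(treatment_mean: float, baseline_mean: float) -> float:
    """Relative lift of the treatment group over the baseline group, in percent."""
    if baseline_mean == 0:
        raise ValueError("baseline mean must be non-zero to compute a lift")
    return (treatment_mean - baseline_mean) * 100.0 / baseline_mean


# Placeholder 30-day average spend per person (illustrative values only,
# not data from the experiment).
poor_raters_no_prompt = 100.0    # rated "poor", no open-end positive prompt
poor_raters_with_prompt = 155.0  # rated "poor", got the open-end positive prompt

lift = percent_lift(poor_raters_with_prompt, poor_raters_no_prompt)
print(f"{lift:.0f}% lift")  # prints: 55% lift
```

Swap in your own group averages (for example, average 30-day giving for donors who were and weren't asked for feedback) to see where your mileage lands.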

But these results undersell the value of feedback even with their eye-popping lift. How so?

- There is more upside to be had if you are responsive to the feedback. Measuring lifts, responding lifts more. All DonorVoice agency-of-record clients use our enterprise-level feedback system and platform. This includes automated, tailored replies to those who give feedback. We’re still using business rules, Boolean logic and templates for the auto-generated replies, but generative AI opens up a world of possibility for much more tailored, conversational responses.

- You should be asking for more than just transactional experience data. Collecting Commitment (our measure of brand loyalty) and Identity means you can tailor both the number of communications and their content.
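For anyone wondering what “business rules, Boolean logic and templates” can look like in practice, here is a rough Python sketch. It is purely illustrative and is not the DonorVoice platform: the 1-to-5 rating scale, the field names, the rule thresholds and the template wording are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    first_name: str
    rating: int    # hypothetical scale: 1 (poor) to 5 (excellent)
    comment: str   # free-text answer, may be empty


# Hypothetical reply templates keyed by rule outcome.
TEMPLATES = {
    "apology": "Hi {first_name}, we're sorry we fell short. We've read your feedback and will follow up.",
    "thanks_with_comment": "Hi {first_name}, thank you for the kind words. We've shared your comment with the team.",
    "thanks": "Hi {first_name}, thank you for the great rating. It means a lot.",
    "neutral": "Hi {first_name}, thanks for taking the time to tell us how it went.",
}


def pick_template(fb: Feedback) -> str:
    """Apply simple Boolean rules to choose a template key."""
    has_comment = bool(fb.comment.strip())
    if fb.rating <= 2:
        return "apology"
    if fb.rating >= 4 and has_comment:
        return "thanks_with_comment"
    if fb.rating >= 4:
        return "thanks"
    return "neutral"


def build_reply(fb: Feedback) -> str:
    """Fill the chosen template with the donor's name."""
    return TEMPLATES[pick_template(fb)].format(first_name=fb.first_name)


print(build_reply(Feedback(first_name="Ana", rating=1, comment="Long wait on the phone.")))
```

Rules like these are easy to audit and predictable, which is the trade-off against the more conversational replies generative AI could produce.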

Kevin