TESTING: Best Practices, Part 1

In his post Locally or Globally, Kevin emphasized the importance of testing that takes you beyond the usual confines of the “locally optimized” world (same old, familiar, more of the same) to a “globally optimized” one (a true rethink of what you’re testing).

Over the years we’ve written a lot about testing practices, and now’s a good time to review and repeat some of our advice, because one of the great barriers to growth is that few fundraisers have a solid idea of what real testing is all about.

It’s almost axiomatic in direct response fundraising that, when in doubt, “Let’s test it.” Consequently, countless thousands, even millions, of dollars are spent, and the result is too often vapid stargazing at best, and months and months of wasted time at worst. Many talk about testing; few know what testing really means.

In short, much of the testing we’ve seen is nearly worthless. Leads to no conclusions. Costs a lot of money. And yields little or zero when it comes to effecting significant change and valuable insights.

Here’s a summary of insights and recommendations from the Agitator, along with a list of some resources:

- Years ago we did a two-part series on Direct Mail Testing for Acquisition (Part 1 here and Part 2 here). This series covers the basic mistakes most nonprofits make in testing.

- We followed that series up with a post titled Direct Mail Testing to Nowhere, where we once more warned that while the logic of the simple A/B test is sound, it is incredibly inefficient and unproductive. Given the usual manner in which this type of testing is conducted, it’s slow, painstaking and amounts to little more than a nudge forward. The affliction of massive incrementalism.

- So, we tried again and outlined the following recommendation:

How to Conduct a Purposeful and Strategic Test

Assuming you’re testing with growth and breakthroughs as your goal, and not just going through the motions, what is the proper way to test? How do we break the all-too-common pattern of timid, take-little-risk testing that infects both agencies and nonprofits? An infection that ends up with testing only the marginal and incremental. Orange vs. blue envelopes … this letter signer vs. that letter signer … $25 vs. $45 … and sizes of envelopes. Incrementalism to nowhere.

QUESTION: How do we conduct testing that is truly strategic and purposeful rather than habitual?

ANSWER: With discipline in the form of a proper plan and by meticulously following proper guidelines and methodologies for each test.

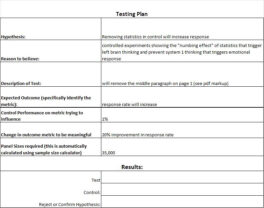

I’ve taken the Testing Plan and Protocol used by DonorVoice as a real-life example of proper testing. Feel free to copy it. More importantly, please use it. (In fact you might ask your consultant or agency to show you the process they use and compare notes.)

First let’s start with an illustration of the Worksheet DonorVoice uses for putting together each and every test they’re involved with. [Click to enlarge.]

This Testing Worksheet/Planning tool is used by the DonorVoice team in conjunction with the following 10-point framework or protocol. Kevin notes, “This testing protocol will lead to far fewer and more meaningful tests (a big plus), and more definitive decision-making regarding outcomes (another big plus).”

1) Allocate 25% of your acquisition and house file budget to testing.

2) Of the 25%, put 10% into incremental and 15% into big ideas.

An important corollary here: some of this money should go into researching ideas or paying others to do it. You can even use the online environment, for example Facebook ads, to pre-vet ideas with small, quick tests that gather data.
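A minimal sketch of the split in steps 1 and 2, assuming the 10% and 15% are shares of the total budget (so together they make up the 25% testing allocation). The helper name and the $200,000 budget are hypothetical; `Decimal` keeps the dollar amounts exact.

```python
from decimal import Decimal

# Shares of the *total* acquisition + house-file budget (steps 1 and 2).
TESTING_SHARE = Decimal("0.25")
INCREMENTAL_SHARE = Decimal("0.10")  # incremental tests
BIG_IDEA_SHARE = Decimal("0.15")     # big ideas, including research and pre-vetting

def allocate_test_budget(total_budget: Decimal) -> dict[str, Decimal]:
    """Split a budget per the 25% / 10% / 15% guideline (hypothetical helper)."""
    testing = total_budget * TESTING_SHARE
    return {
        "business_as_usual": total_budget - testing,
        "testing_total": testing,
        "incremental_tests": total_budget * INCREMENTAL_SHARE,
        "big_idea_tests": total_budget * BIG_IDEA_SHARE,
    }

plan = allocate_test_budget(Decimal("200000"))
for bucket, amount in plan.items():
    print(f"{bucket:>18}: ${amount:,.0f}")
```

If your agency reads the 10/15 split as shares of the testing pot rather than of the whole budget, change the two constants accordingly.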

3) Set guidelines for expected improvement.

Any idea for incremental testing must deliver a 5% (or better) improvement in house results, and 10% in acquisition (we’ll see why the difference in a minute). Any idea considered “breakthrough” must deliver a 20% increase (or better).
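To make the hurdles concrete, this sketch turns each guideline into the response rate an idea has to reach. The table layout and function names are illustrative, not part of the DonorVoice protocol, and it assumes the 20% breakthrough bar applies to both house and acquisition programs.

```python
# Minimum relative improvement an idea must deliver (step 3).
MIN_LIFT = {
    ("incremental", "house"): 0.05,
    ("incremental", "acquisition"): 0.10,
    ("breakthrough", "house"): 0.20,        # assumed: same bar for both programs
    ("breakthrough", "acquisition"): 0.20,
}

def relative_lift(control_rate: float, test_rate: float) -> float:
    """Relative improvement of the test over the control, e.g. 0.10 means 10%."""
    return (test_rate - control_rate) / control_rate

def target_rate(control_rate: float, idea_type: str, program: str) -> float:
    """Response rate the idea has to reach to clear its hurdle."""
    return control_rate * (1 + MIN_LIFT[(idea_type, program)])

def clears_hurdle(control_rate: float, test_rate: float,
                  idea_type: str, program: str) -> bool:
    """True if the observed lift meets the guideline for this kind of idea."""
    return relative_lift(control_rate, test_rate) >= MIN_LIFT[(idea_type, program)]

# A 1% acquisition control: an incremental idea has to reach about 1.1%.
print(target_rate(0.01, "incremental", "acquisition"))
```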

4) Any idea – incremental or breakthrough – must have a ‘reason to believe’ case made that relies on theory of how people make decisions, publicly available experimental test results, or past test results within the organization.

The ‘reason to believe’ must include whether the idea is designed to improve response or average gift or both – this will be the metric(s) on which performance is evaluated. (Note: for small organizations, with limited volumes and budgets you may want to concentrate on testing to improve gift size.)
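Because the ‘reason to believe’ names the metric up front, it helps to compute each metric the same way for every panel. A minimal sketch with hypothetical field names and made-up numbers; revenue per piece is included only as a combined view for ideas aimed at both response and gift size.

```python
from dataclasses import dataclass

@dataclass
class PanelResult:
    """Results for one mailing panel (hypothetical structure)."""
    pieces_mailed: int
    gifts: int
    revenue: float

    @property
    def response_rate(self) -> float:
        return self.gifts / self.pieces_mailed

    @property
    def average_gift(self) -> float:
        # Guard against a panel that produced no gifts at all.
        return self.revenue / self.gifts if self.gifts else 0.0

    @property
    def revenue_per_piece(self) -> float:
        # Equals response_rate * average_gift; useful when an idea targets both.
        return self.revenue / self.pieces_mailed

panel = PanelResult(pieces_mailed=25_000, gifts=250, revenue=8_750.0)
print(f"{panel.response_rate:.2%} response, ${panel.average_gift:.2f} average gift")
```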

A major part of this protocol is guided by the view that far more time should be spent generating test ideas, and therefore on creating the necessary ‘rules’ and incentives to produce that outcome.

This may very well result in 3 to 5 tests per year. If they are well conceived and vetted, that is a great outcome.

5) Determine test volume with math, not arbitrary ‘best practice’ test panels of 25,000 (or whatever).

Use one of many web-based calculators (and underlying, simple statistical formulas). Here is one DonorVoice likes, but there are plenty – all free.

An acquisition example: if our control response rate is 1% and we want to be able to flag a 5% improvement (i.e., a response rate of 1.05% or better) as real, the test panel would need 626,231 names (at 80% power, 95% confidence, and a two-tailed test). That 626,231 is not a typo.
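For those curious about what the calculators do under the hood, here is a sketch of a standard approximation for comparing two response rates (two-tailed, normal approximation). I’m assuming this is close to what the free web calculators use; with a 1% control and a 5% lift it lands at roughly 626,000 names per panel, in line with the figure above. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def panel_size(control_rate: float, relative_lift: float,
               alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate names needed per panel to detect `relative_lift` over the control.

    Two-tailed comparison of two response rates using the normal approximation.
    """
    p1 = control_rate
    p2 = control_rate * (1 + relative_lift)
    delta = p2 - p1
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)       # about 1.96 at 95% confidence
    z_beta = NormalDist().inv_cdf(power)                # about 0.84 at 80% power
    sd_null = math.sqrt(2 * p1 * (1 - p1))              # spread if there is no real difference
    sd_alt = math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))   # spread if the lift is real
    return math.ceil((z_alpha * sd_null + z_beta * sd_alt) ** 2 / delta ** 2)

print(panel_size(0.01, 0.05))  # 1% control, flag a 5% lift
print(panel_size(0.01, 0.10))  # same control, flag a 10% lift
```

Because the required volume grows roughly with the inverse square of the difference you want to detect, asking to flag a 10% lift instead of 5% cuts the panel to about a quarter of the size, which helps explain a higher bar for low-response acquisition mailings.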

How many acquisition test panels have been used in the history of nonprofit DM that are producing meaningless results because of all the statistical noise? A sizeable majority, at least.

6) Do not create a ‘random nth’ control panel that matches the test cell size for comparison.

I don’t know how many nonprofits and agencies employ this approach, but it can lead to exactly the wrong conclusion about whether the test lost or won.

The problem with a ‘random nth’ control panel of equal size to the test (e.g., two panels drawn with random nth at 25,000 each) is that it creates a point of comparison with its own statistical noise, far more than the main control with all the volume on it. A few retorts or excuses have surfaced in defense of this practice, but they are simply off base.
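A quick way to see the problem is to compare standard errors. This sketch assumes a 1% response rate, a 25,000-name test panel, and a hypothetical 500,000-name main control; the random nth control at 25,000 carries about 4.5 times the noise of the full control (the square root of 500,000 / 25,000).

```python
import math

def standard_error(rate: float, n: int) -> float:
    """Standard error of an observed response rate from a panel of n names."""
    return math.sqrt(rate * (1 - rate) / n)

def se_of_difference(rate: float, n_test: int, n_control: int) -> float:
    """Standard error of (test rate - control rate), assuming both are near `rate`."""
    return math.sqrt(standard_error(rate, n_test) ** 2
                     + standard_error(rate, n_control) ** 2)

RATE = 0.01               # assumed control response rate
TEST_N = 25_000
NTH_CONTROL_N = 25_000    # 'random nth' control matched to the test cell
FULL_CONTROL_N = 500_000  # hypothetical main-control volume

print(standard_error(RATE, NTH_CONTROL_N) / standard_error(RATE, FULL_CONTROL_N))
print(se_of_difference(RATE, TEST_N, NTH_CONTROL_N))
print(se_of_difference(RATE, TEST_N, FULL_CONTROL_N))
```

With these assumptions the uncertainty in the test-minus-control difference is about 0.089 percentage points against the nth control versus about 0.064 against the full control, so the matched panel makes real differences nearly 40% harder to see.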

7) Determine winners and losers with math, not eyeballing it.

Use one of many web-based calculators to input test and control performance and statistically declare a winner or loser. Again, here’s DonorVoice’s free choice.
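If you would rather script the check than use a web calculator, a two-proportion z-test is one common way to compare response rates. This sketch (function names hypothetical) compares the test panel against the full-volume control, per step 6, and, on one reading of step 8, labels anything that is not a statistically significant improvement a loser. The panel results are made up for illustration.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(test_gifts: int, test_n: int,
                          control_gifts: int, control_n: int) -> tuple[float, float]:
    """Return (z, two-tailed p-value) for test vs. control response rates."""
    p_test = test_gifts / test_n
    p_control = control_gifts / control_n
    # Pooled rate under the assumption that test and control perform the same.
    pooled = (test_gifts + control_gifts) / (test_n + control_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / test_n + 1 / control_n))
    z = (p_test - p_control) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

def verdict(test_gifts: int, test_n: int, control_gifts: int, control_n: int,
            alpha: float = 0.05) -> str:
    """'winner' only for a statistically significant improvement; otherwise 'loser'."""
    z, p_value = two_proportion_z_test(test_gifts, test_n, control_gifts, control_n)
    return "winner" if z > 0 and p_value < alpha else "loser"

# Hypothetical results: 25,000-name test panel vs. a 500,000-name main control at 1%.
print(verdict(300, 25_000, 5_000, 500_000))  # test responded at 1.20%
print(verdict(255, 25_000, 5_000, 500_000))  # test responded at 1.02%
```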

8) Declare a test a winner or loser.

Add results to the ‘reason to believe’ document; maintain a searchable archive.
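The archive can be as simple as a spreadsheet. For teams that prefer a script, here is a minimal sketch; the record fields are assumptions drawn from steps 4 and 8, and the two entries are placeholders, not real results.

```python
from dataclasses import dataclass

@dataclass
class ArchiveEntry:
    """One archived test (hypothetical fields drawn from steps 4 and 8)."""
    name: str
    reason_to_believe: str
    metric: str   # "response rate", "average gift", or "both"
    verdict: str  # "winner" or "loser"
    notes: str = ""

def search(archive: list[ArchiveEntry], keyword: str) -> list[ArchiveEntry]:
    """Case-insensitive keyword search across the free-text fields."""
    kw = keyword.lower()
    return [
        entry for entry in archive
        if kw in entry.name.lower()
        or kw in entry.reason_to_believe.lower()
        or kw in entry.notes.lower()
    ]

# Placeholder entries for illustration only.
archive = [
    ArchiveEntry("Orange vs. blue envelope", "hypothetical placeholder text",
                 "response rate", "loser"),
    ArchiveEntry("$25 vs. $45 ask", "hypothetical placeholder text",
                 "average gift", "loser"),
]
print([entry.name for entry in search(archive, "envelope")])
```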

9) All winners go full volume rollout.

10) Losers can be resurfaced and changed with a revised ‘reason to believe’ case.

Denny Hatch, one of the best copywriters and direct mail veterans in the business and former editor of Business Common Sense, reminds us of the late Ed Mayer’s admonition: “Don’t test whispers.” Meaning, small, incremental changes (‘whispers’) produce only incremental results not worth whispering about, let alone shouting about.

Whether up or down, tiny changes hardly matter, and they cost lots of time and money. So, put the DonorVoice testing discipline, or one as rigorous, to work for your future.

Please don’t hesitate to share your testing experiences.

Roger

P.S. Next week we’ll post Testing: Part 2, on how to test more than one thing at a time.

Meanwhile … if you have a sustainer program … have one in its infancy … or are thinking of starting one …

… and especially, if you’re involved with a food bank, animal shelter or rescue mission and live on the front lines…

THEN MARK YOUR CALENDAR FOR FEBRUARY 11TH AT 1 PM EASTERN.

That’s when DonorVoice, The Atlanta Humane Society, and One&All will offer a FREE, thought-provoking and practical session on how to apply behavioral science to boost your sustainer program’s conversion and retention rates.

REGISTRATION IS FREE. SIGN UP HERE

What You’ll Learn

1) How and why to make the case for a multi-channel sustainer program, from One&All, an agency specializing in all 3 verticals with loads of proof of the value of these programs.

2) DonorVoice will provide the “101” and the “400-level” details on using behavioral science to build a high-quantity, high-quality sustainer program.

3) How to measure and use supporter satisfaction, Commitment and Identity data to tailor the supporter experience for increased retention.

4) A chance to hear and learn from the Atlanta Humane Society on their experience building a successful, door-to-door canvassing and telefundraising operation for new donors, reinstates and financial conversion among key constituencies like adopters.

I think it’s worth mentioning that smaller nonprofits that send out lower volumes of direct mail are not as well suited to testing as those with larger direct mail programs. You absolutely need a high enough volume to test at all. Otherwise (as our mail house pro once pointed out), test results cannot be counted on to identify or prove anything. The sad part is that I’ve worked with smaller nonprofits that insist on testing (to look ever so clever to their boards) but only mail out about 10,000 pieces at a time. Putting half of that number in the control group is hardly helpful and might even point the agency in the wrong direction, because the results cannot be relied on. So the ‘numbers’ formulas really should be used and adhered to.

As a nonprofit mailing out 10,000 pieces or fewer at a time (divided between French and English donors, as we are based in Montreal), I totally agree that our numbers are not enough to run meaningful tests. We end up comparing our results with a previous year, which is not a reliable method either. For instance, our year-end mailing had great results, the best we’ve had in years. For the first time, we had a matching gift offer. How do I explain the results: the matching gift offer or the Covid-19 context (we are a hospital foundation)? A bit of both, probably.

I have been looking for best testing practices for small organizations. Does anyone have suggestions?