TESTING: Best Practices, Part 2

It may well be time for you to break the “test only one thing at a time” rule. This is especially true if you’re trying to move from local improvement to a globally significant breakthrough, as covered in Kevin’s earlier post, “Locally or Globally?”

Perhaps you’ll want to do some message testing on donor Identity…or Personas…or ask strings…or some of the other behavioral science findings we’ve covered. If so, you may despair over the dangers of too simplistic a testing approach, fear the amount of time it will take to test each element, and worry about the large quantities required.

Here’s the good news: if you embrace larger-scale testing, you can break the famous “test only one thing at a time” rule. Some time back, Nick Ellinger drew an analogy that I’ve found helpful.

Let’s say that instead of trying to get the highest response rate possible, you were trying to reach the highest point on Earth. The trick is that you don’t know where it is, how high it is, or whether you are in a place that’s already pretty high or right at sea level.

Testing one variable at a time is like going out your door with a good set of binoculars. You climb to the highest point you can see, then look again and head for the new highest point. You repeat until you are at the highest point you can possibly see.

It’s a good iterative approach that will get you higher than you were before. But if you started in Indiana, your best hope would be to reach the top of Hoosier Hill, a whopping 1,257 feet high. This is a local maximum. You are “optimized,” in that you can’t get higher by doing anything nearby.

What you really want is a global maximum, the best you can possibly do. But unless you are in the Himalayas already, local optimization will not get you there. And of all the land on Earth, the odds that you happen to start in the Himalayas are vanishingly small.

So if you want to get to Mount Everest, local optimization (changing one variable at a time) won’t do it.

To optimize globally, you must try some different (sometimes very different) things. That starts with a large-scale hypothesis about your donors (e.g., instead of responding to this part of our mission, they will respond to a different part) followed by a wholesale communication test, mailed in sufficient quantity to see whether it works.

But frankly, this is the equivalent of spinning the globe and putting your finger on a location. Unfortunately, more than two-thirds of the Earth’s surface is covered in water. So this type of global testing is clearly risky. Even if you know your donors well enough to limit your search to land, you could end up in Florida, where the highest point is just 345 feet above sea level.

The point is that these large-scale risks are scary. That’s why the Hoosier Hill approach doesn’t look too bad, especially on the budget you’ve been given. But if your big risks do land you in the Rockies, the Alps, or the Himalayas, then global optimization can help you go from foothills to serious peaks.
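For readers who like to see an analogy in code, here is a minimal Python sketch of it. The terrain is a made-up list of elevations (not real data), and each step only looks at the two adjacent positions, a simplification of the binoculars. The hypothetical `climb` function plays the role of one-variable-at-a-time testing and stops at the first local maximum; the hypothetical `climb_with_jumps` function is the spin-the-globe idea: land at random spots, climb from each, and keep the best peak found.

```python
import random

# Made-up elevations along a one-dimensional "map" (illustration only).
TERRAIN = [0, 3, 5, 4, 2, 1, 6, 9, 12, 8, 3, 0, 2, 29, 15, 4]


def climb(start: int, terrain: list[int]) -> int:
    """Local optimization: keep stepping to the higher neighbour until
    neither neighbour is higher, then return that position."""
    pos = start
    while True:
        neighbours = [p for p in (pos - 1, pos + 1) if 0 <= p < len(terrain)]
        best = max(neighbours, key=lambda p: terrain[p])
        if terrain[best] <= terrain[pos]:
            return pos  # a local maximum: nothing nearby is higher
        pos = best


def climb_with_jumps(terrain: list[int], jumps: int, seed: int = 0) -> int:
    """'Spin the globe' `jumps` times, climb locally from each landing
    spot, and return the position of the highest peak reached."""
    rng = random.Random(seed)
    peaks = [climb(rng.randrange(len(terrain)), terrain) for _ in range(jumps)]
    return max(peaks, key=lambda p: terrain[p])


home_peak = climb(0, TERRAIN)
print("One step at a time from position 0:", TERRAIN[home_peak])  # prints 5
print("Best after 10 jumps:", TERRAIN[climb_with_jumps(TERRAIN, jumps=10)])
```

Starting at position 0, the one-step climber stops at an elevation of 5, even though a peak of 29 exists elsewhere on the map; only a jump that lands near position 13 finds it. Each extra jump costs something (in fundraising terms, more test volume), which is exactly the trade-off described above.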

There are some ways to mitigate your risks while still testing big ideas. One is to take a portfolio approach to risk. As noted earlier in Testing: Part 1, the DonorVoice team recommends allocating 15% of your volume to large-scale testing and 10% to incremental improvements. This lets you keep progressing up the hill you are on while you scout the terrain for higher peaks.
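As a quick illustration of the arithmetic, here is a small Python helper that splits a campaign’s volume using the 15% / 10% guideline. The function name and the assumption that everything not allocated to testing goes to the control package are ours, for illustration only.

```python
def allocate_volume(total_pieces: int,
                    breakthrough_share: float = 0.15,
                    incremental_share: float = 0.10) -> dict[str, int]:
    """Split a campaign's volume into test cells (hypothetical helper).

    Assumes whatever isn't allocated to testing goes to the control.
    """
    if breakthrough_share + incremental_share > 1:
        raise ValueError("test shares cannot exceed 100% of volume")
    breakthrough = round(total_pieces * breakthrough_share)  # large-scale tests
    incremental = round(total_pieces * incremental_share)    # small tweaks
    control = total_pieces - breakthrough - incremental      # everything else
    return {"breakthrough": breakthrough,
            "incremental": incremental,
            "control": control}


print(allocate_volume(200_000))
```

For a 200,000-piece mailing, this sets aside 30,000 pieces for large-scale tests and 20,000 for incremental tests, leaving 150,000 on the control.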

Another significant route to the peaks is to have a clear idea of why your donors give. In earlier posts we’ve stressed the importance of learning about your donors through the onboarding process and the types of information you’d want to collect.

If you have information like the identities of your donors, their commitment levels, and what makes them committed to your organization, the risk of creating a communication that differs significantly from your usual approach drops considerably. In fact, it’s exactly the type of approach you should be taking.

Finally, there are ways to test multiple variables simultaneously, even before a donor sees a live communication. The Agitator Toolbox contains one such solution. It’s a fast, inexpensive, and highly accurate technology for testing literally hundreds or even thousands of variables at a time. You might want to explore it. It’s why we labeled it “18 Months’ Worth of Testing in a Day.”

Here’s a short video summarizing the process.

Using a panel of your donors, advanced survey techniques, and some sophisticated statistical analysis, this tool can tell you which images, messages, and themes are worth testing in live media (and which aren’t). No wonder one user described it as “18 months’ worth of testing in a day.”

Most organizations should acknowledge that their process for deciding what gets tested is anything but empirical, rigorous, or efficient. More typically, it borders on the haphazard, with an abundance of caution and conventional wisdom thrown in.

It doesn’t have to be this way. Fortunately, our commercial product development brethren can point to the solution. Using a multivariate, survey-based methodology, nonprofits can pre-identify the best test ideas: those most likely to compete with and beat the control.

By taking this scientific, disciplined route, nonprofits can greatly reduce costs by NOT mailing test packages that are likely to perform poorly, and increase net revenue by putting more volume behind likely winners.

HOW PRE-IDENTIFICATION WORKS

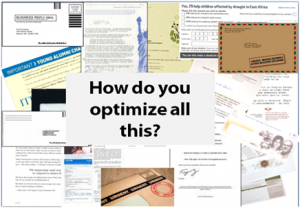

The pre-identification of likely winners and losers is done in two parts. The illustration here involves direct mail, but the approach works for any channel:

1) First, survey donors who are representative of those who will receive the actual mailing, show them visuals of the direct mail package, and measure their preferences using a very specific and battle-tested methodology.

2) Next, use the survey data to build a statistical model that assigns a score to every single element that was evaluated.
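DonorVoice’s exact survey design and model aren’t described here, so the following is only a simplified stand-in to show the idea behind step 2. It assumes a ratings-based survey in which every combination of elements is shown equally often (a balanced, full-factorial design); under that assumption, each element’s score can be read as the average rating when it appears minus the overall average. Commercial conjoint studies typically use choice-based questions and more elaborate statistical models. The element names, levels, ratings, and function names below are all invented for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey data: (package shown, one respondent's 1-10 rating).
# Every teaser/image combination appears equally often (balanced design).
RATINGS = [
    ({"teaser": "urgent", "image": "photo"}, 8),
    ({"teaser": "urgent", "image": "illustration"}, 6),
    ({"teaser": "soft", "image": "photo"}, 6),
    ({"teaser": "soft", "image": "illustration"}, 4),
    ({"teaser": "urgent", "image": "photo"}, 9),
    ({"teaser": "urgent", "image": "illustration"}, 7),
    ({"teaser": "soft", "image": "photo"}, 5),
    ({"teaser": "soft", "image": "illustration"}, 3),
]


def part_worths(ratings):
    """Score each element level as (mean rating when that level is shown)
    minus (grand mean). This matches a main-effects regression only when
    the design is balanced."""
    grand = mean(score for _, score in ratings)
    by_level = defaultdict(list)
    for package, score in ratings:
        for element, level in package.items():
            by_level[(element, level)].append(score)
    return grand, {key: mean(vals) - grand for key, vals in by_level.items()}


def predict(package, grand, worths):
    """Predicted rating = grand mean + the scores of the package's levels."""
    return grand + sum(worths[(elem, lvl)] for elem, lvl in package.items())


grand, worths = part_worths(RATINGS)
for teaser in ("urgent", "soft"):
    for image in ("photo", "illustration"):
        pkg = {"teaser": teaser, "image": image}
        print(teaser, image, round(predict(pkg, grand, worths), 2))
```

With this toy data, the urgent teaser scores +1.5 and the photo scores +1.0, so the model predicts that an urgent-teaser, photo package would rate about 8.5, the best of the four combinations. Packages predicted to score poorly are candidates to drop before they ever reach the mail.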

This methodology is well established and used by large consumer companies (e.g., Coca-Cola, General Mills, Procter & Gamble) to guide product development for many of the sodas, cereals, and detergents on grocery store shelves.

I know it works in the nonprofit sector as well, because DonorVoice has successfully used it for a number of large and small nonprofits. You can find a short video of how the process works, along with more detailed information, in the relevant section of The Agitator Toolbox.

So, to the idea of testing one variable at a time: a fine idea as far as it goes. But to get to the best donor communication and fundraising you can possibly have, you probably need to take broader, higher leaps.

What’s your experience with broader testing?

Roger

P.S. Just one more day until the upcoming sustainer webinar. Learn how to move past the false choice between quantity and quality of donors. Hear from The Atlanta Humane Society, One&All, and DonorVoice on how to apply behavioral science to get rid of your “Or” problem.

REGISTRATION IS FREE. SIGN UP HERE

“I’ve seen fire and I’ve seen rain…”

And I’ve seen folks who think a 4.75 response beat a 4.62 response on a test base of 400.

So while all these steps are great, let’s not forget that old thing called “statistical significance.”
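A quick way to check a claim like that is a two-proportion z-test. The Python sketch below assumes 4.75 and 4.62 are percentage response rates and that 400 is the number of pieces in each panel (the comment doesn’t say which); it uses only the standard library, and the function names are our own. The second function gives a rough per-panel sample size for 80% power at the usual 5% significance level, using the standard normal-approximation formula.

```python
from math import ceil, sqrt
from statistics import NormalDist


def two_proportion_z_test(rate_a: float, rate_b: float,
                          n_a: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two response rates.

    Rates are proportions (0.0475 means 4.75%); n_a and n_b are panel
    sizes. Returns (z, p_value).
    """
    # Pooled rate under the null hypothesis of "no real difference".
    pooled = (rate_a * n_a + rate_b * n_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def panel_size_needed(rate_a: float, rate_b: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate pieces needed per panel to detect rate_a vs rate_b."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = rate_a * (1 - rate_a) + rate_b * (1 - rate_b)
    return ceil((z_alpha + z_power) ** 2 * variance / (rate_a - rate_b) ** 2)


# Hypothetical reading of the comment: 4.75% vs 4.62%, 400 pieces per panel.
z, p = two_proportion_z_test(0.0475, 0.0462, 400, 400)
print(f"z = {z:.2f}, p = {p:.2f}")
print(f"Pieces per panel needed: {panel_size_needed(0.0475, 0.0462):,}")
```

Under those assumptions, the z-score comes out around 0.09 (p ≈ 0.93), nowhere near significant, and reliably detecting a 0.13-point gap would take more than 400,000 pieces per panel.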

And thanks, Roger, for keeping us on track!