What Impact Messaging Works Best? The Goldilocks Finding

One of humanity’s basic psychological needs is a sense of competence or efficacy.

Putting time in on something, feeling like you suck at it and are getting no better, and then receiving no feedback or negative feedback undermines your motivation to keep doing it.

This includes charitable giving.

The donor’s sense of competence and efficacy (or lack of it) can manifest in a variety of ways, not least in how the solicitation communicates the impact the gift will have. Make it clear the money will do some good and the donor can feel a sense of efficacy or competence in their decision.

Of course the question is, what’s the best way to communicate this?

What Impact Messaging Motivates Donors to Give

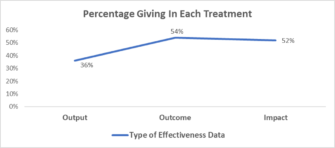

A recent academic experiment looked to provide more insight into what kind of impact messaging works best in motivating people to give. The experiment tested three kinds of effectiveness indicator data (Output, Outcome and Impact) that varied by the immediacy of the benefit cited.

Participants were randomly assigned to one of three treatments, all using the same campaign appeal and ask; the only difference was the type of effectiveness data included.

There is a big increase in giving if you get beyond the uber-simple counting approach of Output data (number of people trained), which is a very common effectiveness indicator in charity appeals because it is the simplest to gather.

This experiment suggests going one “level” deeper to Outcome data (people employed after training) is well worth the time and effort on the program side.

However, as Goldilocks could have told us, the middle option is best; there is no upside in getting the longer-term, harder-to-claim, harder-to-source Impact data (income gains; drop in poverty).

This finding will not sit well with the effective altruism movement that wants donors to do more critical thinking and evaluation to focus not on widgets off the assembly line (i.e. Output) but material, social impact.

The news for that crowd is about to get worse. This experiment didn’t stop here. A further hypothesis is that the type of effectiveness data that drives donating behavior might differ for different people. Sure, in aggregate, the Goldilocks option works best. But might there be some people who need, want and prefer Impact data to feel competent and efficacious?

Randomly slicing and dicing people by demographics or channel of acquisition or other superficial views of humanity to find these people is a needle-in-a-haystack approach, or worse.

Instead, this experiment does what we at DonorVoice preach and practice: digging deeper and using subject matter expertise on the why of behavior to develop tests that don’t incorrectly assume everyone is the same.

Specifically, this study hypothesized that some donors are naturally more inclined to a reflective, effortful, conscious approach to decision making while others are more intuitive, ‘gut-feel’ decision makers. The authors further hypothesized that the more reflective decision makers would prefer the deeper Impact data, a preference ‘hidden’ in the aggregate result showing Impact data is no better than Outcome data.

Alas, even these more reflective people (measured with a validated survey scale) were satisfied with Outcome data.

This study, like all good experiments with theory behind them, also sheds light on the ‘why’ of the findings.

People prefer Outcome data over Output because the former creates a greater sense of trust, perceived innovativeness and perceived social good.

But donor decision making seems to skew towards the intuitive, even for people who are more naturally reflective, effortful thinkers; hence Impact data does not provide any extra value to donors.

Kevin

Kevin, I have often wondered why more outcomes are not shared in both appeal and thank-you letters. My guess is that many charities are not comfortable with their ability to measure such outcomes accurately.

Do you have any further data or thoughts about that?

Thanks again, for planting such seeds of wisdom!

Jay, I expect you’re right, and anecdotally I do hear about the difficulty of getting Outcome (vs. Output) data, much less Impact data, where it is hard to control for other factors and lay claim to more concrete social outcome metrics (e.g. reduced mortality, lower poverty rate).

Kevin, can you please cite the experiment/research referenced? Would love to review it in total. Thanks!

Jono,

Here is the article title: “Do You Like What You See? How Nonprofit Campaigns With Output, Outcome, and Impact Effectiveness Indicators Influence Charitable Behavior”. The authors are Jutta Bodem-Schrötgens and Annika Becker, and it was published in Nonprofit and Voluntary Sector Quarterly, Volume 49 (2): 20 – Apr 1, 2020.