Trust in the Eye of the Beholder?

We do a lot of surveys. Heck, I’ve got an advanced degree in Survey Methodology, whatever the hell that means.

Surveys seem to be everywhere, especially in politics and public policy. Your organization might run a survey to understand its constituents, or to publish the results in support of this or that cause.

What makes a survey trustworthy to your internal or external audience? Plenty of methodology details get used as a proxy for survey quality. Sample size is a favorite. What about simply stating that the sample is “representative”? Or that the respondents were chosen at random?

We’d like to think we’re sophisticated consumers who judge a survey’s quality on these objective methodological shorthands.

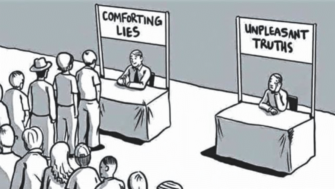

But how do these methodology details stack up against our human biases? You know, the tendency to distrust a survey when it contradicts what we already believe?

In a recent experiment that tested 48 different survey introductions, each offering a different amount of methodological detail (from none to a lot), the researchers found that these quality indicators matter very little to our judgments of trustworthiness.

Instead, if the survey result matches what I already believe, I trust it; if not, I reject it.

Demoralizing but true.

Having said that, there is a tiny Trust-Bump from reporting a sample size of 1,000 rather than 100, and from describing the survey as “representative”.

Kevin