Your Campaign Results Report Is Wrong and It’s Unavoidable

You know those funhouse mirrors that distort your reflection? One makes you tall and thin, another short and wide. That’s how most campaign reporting works. The numbers might look impressive or disappointing, but they never show the real picture.

Your Numbers Are Lying (And it’s not their fault)

Here’s the thing: all campaign-level reporting is wrong—and I mean all of it. The only difference is in how wrong and in which direction.

- If your November mailing brought in $50k, did it really?

- What if someone donated online after seeing the mailing? Does that count as mail revenue?

- What if their decision was nudged by your Facebook ad from three days earlier?

- Worse, what if your mailing just pulled forward dollars that would have come in December anyway? Congratulations—you didn’t raise more; you just sped up the inevitable.

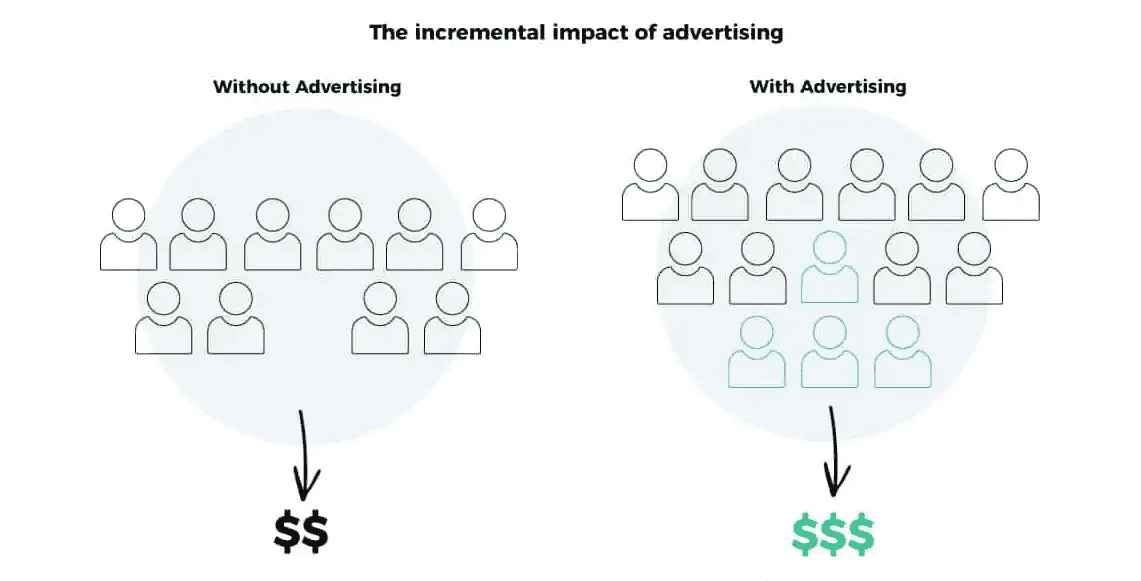

The November campaign report will never tell you how much of that revenue is truly incremental, meaning extra money your organization wouldn’t have seen otherwise. And without knowing that, you’re flying blind.

Incrementality Tests: The Only Honest Answer

To find out if your campaign generated extra revenue, you need to run a simple test:

- Group A gets the mailing.

- Group B doesn’t.

- Then you compare total revenue for each group—not just the checks mailed back, not just the revenue attributed to one channel or two, but everything.

This is how you cut through the noise. It’s not flashy, but it works.
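The test design above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: it assumes random assignment as the way to get comparable groups, and the function names (`split_mailed_holdout`, `total_revenue`), the donor IDs, and the gift records are all hypothetical.

```python
import random


def split_mailed_holdout(donor_ids, holdout_fraction=0.5, seed=0):
    """Randomly split donors into a mailed group (A) and an unmailed holdout (B).

    Random assignment is an assumption of this sketch; it is one common way
    to make the two groups comparable.
    """
    ids = list(donor_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_holdout = int(len(ids) * holdout_fraction)
    holdout = set(ids[:n_holdout])
    mailed = set(ids[n_holdout:])
    return mailed, holdout


def total_revenue(gifts, group):
    """Sum every gift from donors in the group, whatever channel it came through."""
    return sum(amount for donor_id, _channel, amount in gifts if donor_id in group)


# Hypothetical donors and gifts: (donor_id, channel, amount in dollars)
donors = range(1, 9)
gifts = [
    (1, "mail", 50),
    (2, "online", 25),
    (3, "mail", 100),
    (4, "online", 40),
    (5, "event", 75),
    (6, "mail", 30),
    (7, "online", 60),
    (8, "mail", 20),
]

mailed, holdout = split_mailed_holdout(donors, holdout_fraction=0.5, seed=42)

# Compare everything each group gave, not just the gifts coded to the mailing.
print("Mailed group total: ", total_revenue(gifts, mailed))
print("Holdout group total:", total_revenue(gifts, holdout))
```

The point of `total_revenue` ignoring the channel field is that an online or event gift from a mailed donor still counts toward the mailed group, which is what makes the comparison honest.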

An Illustration

Let’s say you mail 10,000 people in November. You also hold back 10,000 similar people (matched on donor history, frequency, etc.) who don’t get the mailing. Here’s what happens:

- Test Group (Mailed): $50,000 in total revenue for Q4.

- Control Group (Unmailed): $40,000 in total revenue for Q4.

The difference? $10,000. That’s your incremental revenue. Now, subtract the cost of the mailing ($10,000 at $1 per piece):

Incremental ROI = (Incremental Revenue − Cost) / Cost

Incremental ROI = ($10,000 − $10,000) / $10,000 = 0%
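For anyone who wants to run the arithmetic on their own numbers, here is a small Python sketch of the same calculation. The function name `incremental_roi` is made up for illustration, and comparing raw totals this way assumes the two groups are the same size, as they are in the example (10,000 each).

```python
def incremental_roi(test_revenue, control_revenue, cost):
    """Return incremental ROI as a fraction (0.0 means breakeven).

    Assumes the test and control groups are the same size, so their
    total revenues can be compared directly.
    """
    if cost <= 0:
        raise ValueError("cost must be positive")
    incremental_revenue = test_revenue - control_revenue
    return (incremental_revenue - cost) / cost


# Figures from the illustration: $50,000 mailed, $40,000 unmailed, $10,000 cost.
roi = incremental_roi(test_revenue=50_000, control_revenue=40_000, cost=10_000)
print(f"Incremental ROI: {roi:.0%}")  # prints "Incremental ROI: 0%"
```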

You didn’t lose money, but you didn’t add value either. And this breakeven test saved you from patting yourself on the back for what you might’ve assumed was a killer campaign.

Some models, known as Marketing Mix Models (MMM), are designed to measure incrementality across channels by isolating the impact of each channel on total revenue. We’ll share details on these in subsequent posts, since they do get you closer to understanding the relationship between spend across channels and total revenue.

But like all models and campaign reports, they are also wrong, though potentially useful. To make them fully useful, you have to act on them: change how much and/or how you spend across channels, then see how the MMM projections fare against the cold, unforgiving real world.

You didn’t raise whatever you said you raised on Campaign A. Accepting this, caring about it and trying to be less wrong is the difference between putting yourself on a growth path vs. moving your arms and legs faster to keep treading the same water.

Kevin

Thank you for ‘splaining this to me as if you know exactly what I needed to hear. The closing paragraph brought a smile to my face.

Thanks for the read and comment Jim.